The EU's AI Act: Implications and Innovation

Ken Forster


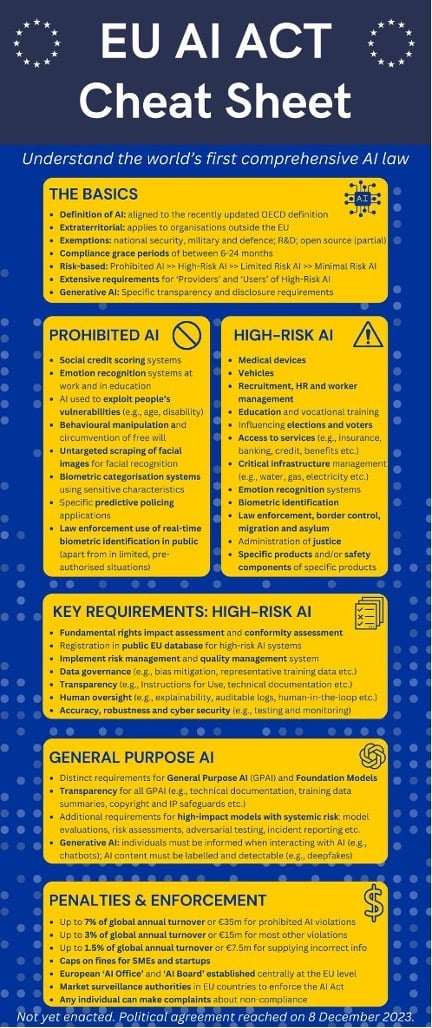

Following a marathon negotiation session that concluded on December 8th, 2023, EU negotiators from the European Parliament and the Council reached a provisional political agreement on the EU Artificial Intelligence Act. The legislation, first proposed by the European Commission in 2021, is slated to go into effect in 2025 once formally adopted by the European Parliament and the Council, making it the first significant regulation of AI by a major global power. The rapid rise of generative AI gave EU legislators a sense of urgency to complete final negotiations by the end of 2023.

Tiering Regulation Based on Potential Risks

The legislation employs a tiered risk management system, categorizing types of AI according to their potential impact on health, safety, and human rights. High-risk AI systems, such as those used in critical infrastructure, biometric identification, and law enforcement, will face stricter requirements to mitigate potential harms, including extensive documentation, higher-quality data sets, human oversight, and risk-mitigation systems. Separately, the most powerful general-purpose AI models face additional obligations. This tier is defined by the total amount of computation used to train a model, measured in floating-point operations (FLOPs, a count of operations rather than a per-second rate), with a threshold of 10^25 FLOPs. At the time of the agreement, GPT-4 was reportedly the only existing model that would fall under this definition.
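To make the compute threshold concrete, here is a minimal Python sketch. It assumes the widely used rule of thumb that training a dense model costs roughly 6 floating-point operations per parameter per training token (training FLOPs ≈ 6 × N × D). The 6ND heuristic, the function names, and the 70-billion-parameter, 2-trillion-token example are illustrative assumptions, not anything the Act prescribes; the Act refers to the cumulative computation actually used in training.

```python
# Threshold from the provisional agreement: a general-purpose AI model trained
# with more than 10^25 floating-point operations (a total, not a per-second
# rate) is presumed to pose systemic risk.
SYSTEMIC_RISK_THRESHOLD_FLOPS = 1e25


def estimate_training_flops(n_parameters: float, n_training_tokens: float) -> float:
    """Approximate total training compute with the 6 * N * D rule of thumb.

    This heuristic (an assumption, not part of the Act) counts roughly six
    floating-point operations per parameter per training token, covering the
    forward and backward passes.
    """
    return 6.0 * n_parameters * n_training_tokens


def exceeds_threshold(n_parameters: float, n_training_tokens: float) -> bool:
    """True if the estimated training compute is greater than 10^25 FLOPs."""
    return estimate_training_flops(n_parameters, n_training_tokens) > SYSTEMIC_RISK_THRESHOLD_FLOPS


if __name__ == "__main__":
    # Hypothetical model: 70 billion parameters trained on 2 trillion tokens.
    flops = estimate_training_flops(70e9, 2e12)
    print(f"{flops:.2e}")                 # 8.40e+23
    print(exceeds_threshold(70e9, 2e12))  # False
```

Under these assumptions the hypothetical model lands at about 8.4 × 10^23 FLOPs, less than a tenth of the threshold, which illustrates why only the very largest models were expected to qualify.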

The Minimal Risk classification will cover most AI systems, such as spam filters and automated recommendation systems. Providers of these systems face no new mandatory obligations, though they may voluntarily sign up to AI codes of conduct.

Systems in the Unacceptable Risk category, deemed to present a clear threat to fundamental rights, will be banned outright. This category includes certain forms of predictive policing, emotion recognition in the workplace, manipulation of human behavior to circumvent free will, and categorization of people based on sensitive characteristics such as political or religious beliefs, race, or sexual orientation. Facial recognition technology in law enforcement will be allowed only for limited purposes, such as searching for victims of kidnapping and human trafficking or preventing terrorist threats.

Addressing Deepfakes, Bots and AI-Generated Content

The Act will also require that deepfakes and other AI-generated content be labeled as such, and users of chatbots will need to be notified that they are interacting with a machine. The new law will ban "social scoring" systems that evaluate the compliance or trustworthiness of citizens (as dramatized in Netflix's Black Mirror). Foundation model providers must submit detailed summaries of the training data used to build their models. Penalties for companies violating the rules range from 1.5% to 7% of global annual turnover.
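The percentages above work as caps alongside fixed amounts. Under the provisional agreement as announced by the Council, each maximum fine is the higher of a fixed sum or a share of the company's worldwide annual turnover: €35 million or 7% for banned AI applications, €15 million or 3% for breaches of other obligations, and €7.5 million or 1.5% for supplying incorrect information. The minimal Python sketch below computes that upper bound. The enum and function names are hypothetical, the sketch ignores the more proportionate caps foreseen for SMEs and start-ups, and actual fines would be determined by the relevant authorities within these limits.

```python
from enum import Enum


class Violation(Enum):
    """Hypothetical labels for the three fine tiers in the provisional agreement.

    Each value is (fixed amount in euros, share of worldwide annual turnover
    in basis points, where 100 basis points = 1%).
    """
    PROHIBITED_PRACTICE = (35_000_000, 700)    # EUR 35M or 7%
    OTHER_OBLIGATION = (15_000_000, 300)       # EUR 15M or 3%
    INCORRECT_INFORMATION = (7_500_000, 150)   # EUR 7.5M or 1.5%


def max_fine_eur(violation: Violation, global_turnover_eur: int) -> int:
    """Return the upper bound on the fine: the higher of the fixed amount
    and the turnover-based amount. Integer arithmetic avoids float rounding."""
    fixed_amount, basis_points = violation.value
    turnover_based = global_turnover_eur * basis_points // 10_000
    return max(fixed_amount, turnover_based)


if __name__ == "__main__":
    # Hypothetical large company with EUR 2 billion in worldwide turnover.
    print(f"{max_fine_eur(Violation.PROHIBITED_PRACTICE, 2_000_000_000):,}")  # 140,000,000
    # Hypothetical smaller company with EUR 100 million in turnover.
    print(f"{max_fine_eur(Violation.INCORRECT_INFORMATION, 100_000_000):,}")  # 7,500,000
```

For the larger company the turnover share dominates, while for the smaller one the fixed amount sets the ceiling.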

Source: Oliver Patel

Mixed Reactions as Expected

The agreed regulations have been welcomed in many quarters (particularly by the regulators themselves). Emerging Europe calls the Act "a vision of the future, where artificial intelligence is harnessed safely, ethically, and in alignment with fundamental human rights." Big Tech companies (Google, Apple, Microsoft, Meta, and other global giants) had pushed back against what they saw as regulatory overreach, and the lobbying group DigitalEurope argues that the regulations will be expensive for AI companies to comply with and risk putting smaller firms, particularly in Europe, at a disadvantage.

According to AI pioneer Yann LeCun, while the legislation ultimately includes restrictions for foundation models, a positive outcome is its broad exemptions for open-source models, which are developed using code that developers can freely alter for their own products and tools. Dan McQuillan, a lecturer at Goldsmiths, University of London, sees a win for industry: "The whole thing is premised on a risk-based approach. This is a departure from GDPR, which is rights-based with actionable rights. Therefore, it's a huge victory for the industry - it's a product safety regulation that regulates putting AI on the market."

Momenta's Take - What This Means for Innovation

The Act will not go into effect until 2025, but the EU plans to urge companies to begin following the rules voluntarily (though there will be no penalties until the regulations take effect). The U.S. has yet to pass comprehensive AI legislation, and there may be efforts to protect American firms.

For companies building AI-based solutions, it helps to have clearly defined categories specifying which solutions will be regulated and to what extent. However, complying with the new documentation and verification requirements will strain resources, particularly for smaller firms and developers working on High-Risk category solutions.

Given that EU consumer advocates had been looking for more restrictive rules, it appears that the new guardrails provide ample maneuvering room for innovation – and with another year before the Act goes into effect, there is time to adjust or pivot as needed. Finally, developers focusing on foundation models should strongly prefer open-source alternatives, as they offer the transparency essential for compliance and potentially result in reduced costs.

Momenta is the leading Industrial Impact venture capital + growth firm. We accelerate entrepreneurs and leaders devoted to the digitization of energy, manufacturing, smart spaces, and supply chains. Since 2012, our team of deep industry operators have made over 100 investments in entrepreneurs and helped scale over 150 industry leaders via our award-winning executive search and strategic advisory practices.